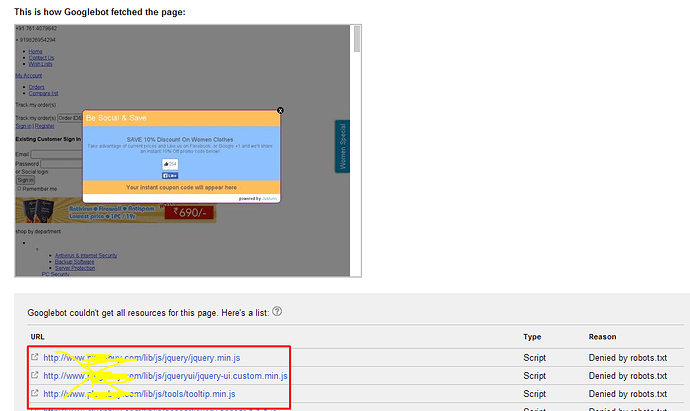

I have been looking at the Fetch and Render option in Google Webmaster Tools. It shows exactly what Google fetched and flags any issues it ran into. I found that my robots.txt file is blocking CSS and JS files, and as a result my page renders like a static site. Can anyone try it with their own site and see what they get?

Similar problem for me, but it doesn't mention anything being blocked by robots.txt.

The one problem it flags for me is print.css, so no biggie there.

It also reports some images as temporarily unavailable, but when I click the link the images open fine in the browser. Have you checked your robots.txt in Webmaster Tools as well?

John

robots.txt rules only affect the URLs they match, not whatever those pages link to or embed.

[quote name=‘johnbol1’ timestamp=‘1402437846’ post=‘185497’]

Similar problem for me, but it doesn't mention anything being blocked by robots.txt.

The one problem it flags for me is print.css, so no biggie there.

It also reports some images as temporarily unavailable, but when I click the link the images open fine in the browser. Have you checked your robots.txt in Webmaster Tools as well?

John

[/quote]

It is actually looking like this:

Google is unable to access the files because of the robots.txt restrictions.

Google just emailed me the following about all of my sites:

Google systems have recently detected an issue with your homepage that affects how well our algorithms render and index your content. Specifically, Googlebot cannot access your JavaScript and/or CSS files because of restrictions in your robots.txt file. These files help Google understand that your website works properly, so blocking access to these assets can result in sub-optimal rankings.

Seems like this is now an issue.

I've received several of these Google emails too. Should we remove the following lines from robots.txt?

Disallow: /design/

Disallow: /js/

[quote name='Flow' timestamp='1438093573' post='224609']

Google just emailed me the following about all of my sites:

Google systems have recently detected an issue with your homepage that affects how well our algorithms render and index your content. Specifically, Googlebot cannot access your JavaScript and/or CSS files because of restrictions in your robots.txt file. These files help Google understand that your website works properly, so blocking access to these assets can result in sub-optimal rankings.

Seems like this is now an issue.

[/quote]

I just received the same message from Google.

The robots.txt file distributed with V4.3.3 only denies access to /app and /store_closed.html.

Everything else is allowed (assuming it is allowed via .htaccess files).

Google should never see any references to /design, so that's probably fine to leave in. But I don't know of any reason why you'd want to block /js in robots.txt (note that it is accessible via Apache).

Remember that, to guard against spoofing, Google renders the page itself and looks for things like 'display: none' in your HTML/CSS so that it doesn't index content the user can't see. So I'm guessing it also examines your JS to see whether any HTML is dynamically hidden or added in a way that might skew your search results. Otherwise there would be no need for it to have direct access to the JS.

Older versions of CS-Cart block just about everything.

Here's the distributed robots.txt from 4.1 and 4.2 stores:

User-agent: *

Disallow: /images/thumbnails/

Disallow: /app/

Disallow: /design/

Disallow: /js/

Disallow: /var/

Disallow: /store_closed.html
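To see which asset URLs each file actually blocks, here is a minimal sketch using Python's standard urllib.robotparser. The 4.1/4.2 rules are copied from the post above; the V4.3.3 rules are an assumption reconstructed from the description earlier in the thread (only /app and /store_closed.html), and the asset paths are hypothetical examples, not verified CS-Cart paths. Note that robotparser applies the first matching rule and doesn't understand Google's `*` wildcards, so it is only a rough stand-in for Googlebot; for these prefix-only files that makes no difference.

```python
from urllib.robotparser import RobotFileParser

# robots.txt distributed with CS-Cart 4.1/4.2, copied from the post above.
ROBOTS_41_42 = """\
User-agent: *
Disallow: /images/thumbnails/
Disallow: /app/
Disallow: /design/
Disallow: /js/
Disallow: /var/
Disallow: /store_closed.html
"""

# ASSUMPTION: V4.3.3 rules reconstructed from the description
# ("only denies access to /app and /store_closed.html").
ROBOTS_433 = """\
User-agent: *
Disallow: /app/
Disallow: /store_closed.html
"""

# HYPOTHETICAL asset paths, for illustration only.
ASSETS = [
    "/js/lib/jquery/jquery.min.js",
    "/design/themes/basic/css/styles.css",
    "/var/cache/misc/assets/styles.css",
]


def blocked_assets(robots_txt, paths, agent="Googlebot", host="http://www.mysite.com"):
    """Return the paths that `agent` may not fetch under `robots_txt`."""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines; no network access needed.
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, host + p)]


if __name__ == "__main__":
    print("4.1/4.2 blocks:", blocked_assets(ROBOTS_41_42, ASSETS))
    print("4.3.3 blocks:", blocked_assets(ROBOTS_433, ASSETS))
```

With the 4.1/4.2 file every one of those asset paths comes back blocked, while the assumed 4.3.3 rules leave them all fetchable.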

This is what I currently have for 2.2.5. It's too early to tell the consequences at this time.

User-agent: *

Crawl-delay: 3

Disallow: /controllers/

Disallow: /payments/

Disallow: /store_closed.html

Disallow: /core/

Disallow: /lib/

Disallow: /install/

Disallow: /schemas/

Disallow: /index.php

Disallow: /*?

Sitemap: http://www.mysite.com/sitemap.xml
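One rule worth a second look here is `Disallow: /*?`. Google treats `*` as a wildcard, so that rule blocks every URL containing a query string, which would also catch any CSS/JS file requested with a `?` suffix such as a cache-busting version number (whether 2.2.5 does that is an assumption I haven't verified). Python's urllib.robotparser doesn't implement wildcards, so below is a minimal hand-rolled matcher. It is my own sketch covering only `*` and a trailing `$`, and it treats the rules as disallow-only because this file has no Allow lines. The asset paths are hypothetical.

```python
import re

# Disallow rules from the 2.2.5 robots.txt above.
DISALLOW_225 = [
    "/controllers/", "/payments/", "/store_closed.html", "/core/",
    "/lib/", "/install/", "/schemas/", "/index.php", "/*?",
]


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern: '*' matches any run of
    characters and a trailing '$' anchors the end of the URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces, then join them with '.*' for each '*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_disallowed(path, disallow_patterns):
    """True if any rule matches from the start of the path (prefix match).
    With no Allow rules, a single matching Disallow is enough to block."""
    return any(rule_to_regex(p).match(path) for p in disallow_patterns)


if __name__ == "__main__":
    # HYPOTHETICAL asset URLs, for illustration only.
    for path in ["/skins/basic/customer/styles.css",
                 "/skins/basic/customer/styles.css?1438",
                 "/lib/js/jquery/jquery.min.js"]:
        print(path, "blocked" if is_disallowed(path, DISALLOW_225) else "allowed")
```

The same stylesheet path is allowed on its own but blocked once a query string is appended, and anything served from under /lib/ is blocked by the plain prefix rule.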

I also created an .htaccess file in /js/, since there wasn't one, with the content of…

Options -Indexes

[quote name='johnbol1' timestamp='1438187092' post='224802']

Is this different because it's the demo?

[url="http://demo.cs-cart.com/robots.txt"]http://demo.cs-cart.com/robots.txt[/url]

[/quote]

No, that's the standard file in V4.3.3. Looks like CS-Cart was ahead of the curve on this one.

So it looks like the solution is going to be version-dependent. And on older V2/V3 stores, the CSS referenced by the various add-ons' link tags can be just about anywhere.

[quote]

I also created an .htaccess file in /js/, since there wasn't one, with the content of…

Options -Indexes

[/quote]

This is already in my /js folder on v3.06. What does it do, Tool? Stop directory browsing?

[quote name='johnbol1' timestamp='1438242650' post='224865']

This is already in my /js folder on v3.06. What does it do, Tool? Stop directory browsing?

[/quote]

[quote]Indexes

If a URL which maps to a directory is requested and there is no DirectoryIndex (e.g., index.html) in that directory, then mod_autoindex will return a formatted listing of the directory.[/quote]

-Options prevents Apache from serving a directory listing when only the directory itself is requested, e.g. yoursite.com/some_dir_of_files

Let me reiterate…

Options -Indexes = Don't list me

Options +Indexes = List me

Oops, I meant Options -Indexes.
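If you want to confirm the setting took effect, a rough check is to request the bare directory URL: with Options -Indexes (and no index file in the directory) Apache should answer 403 Forbidden, while with +Indexes mod_autoindex returns a page titled "Index of /…". Below is a minimal sketch with Python's standard urllib; the title-based detection is a heuristic of mine, not anything Apache guarantees, and the URL in the usage note is a placeholder.

```python
import urllib.error
import urllib.request


def looks_like_autoindex(html):
    """Heuristic: mod_autoindex listings are titled 'Index of /path'."""
    return "<title>index of " in html.lower()


def check_directory(url, timeout=10):
    """Classify a directory URL as 'forbidden', 'listing', 'other',
    or 'http <code>' for any other HTTP error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # Options -Indexes with no DirectoryIndex file normally yields 403.
        return "forbidden" if err.code == 403 else f"http {err.code}"
    return "listing" if looks_like_autoindex(body) else "other"
```

For example, `check_directory("http://www.mysite.com/js/")` should come back as "forbidden" once the .htaccess is in place, and "listing" if directory browsing is still on.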